Keeping up with industry news can be tedious, especially now that new AI-based products launch daily. It’s tempting to ignore all of this and focus on work. Unfortunately, that would mean risking missing significant developments. So we subscribe to newsletters and let others read all the news and send us a summary. Soon, we receive a dozen newsletters daily, and we are still not keeping up. Worse, most of the news is not relevant to us.

I work with text and occasionally with images. If tomorrow someone releases an AI model that can generate music, I won’t care (but call me if your model can bring back Jim Morrison). If a cool new startup raised millions in funding, it’s irrelevant to me. Only a fraction of the news matters. But I still have to read all of it to find the relevant parts. Or do I?

What if we could automate it? What if we could use AI to read all the newsletters, find relevant information, and send us a summary once a week? Even better, what if instead of sending us yet another email, it could upload a file to Kindle? That would be cool. Let’s build it.

We need a place to run this automation. We could code the workflow in Python, but automation is supposed to save time. Therefore, we will use the make.com automation platform. It’s a no-code platform, allowing us to build automation using a visual editor. We can create the same workflow using Zapier, Automatisch, or any other Business Process Automation system.

Getting the Emails

For the sake of this article, let’s assume we are interested in information about startups, funding, and VCs. As the output, we want to receive a document containing tweet-sized summaries.

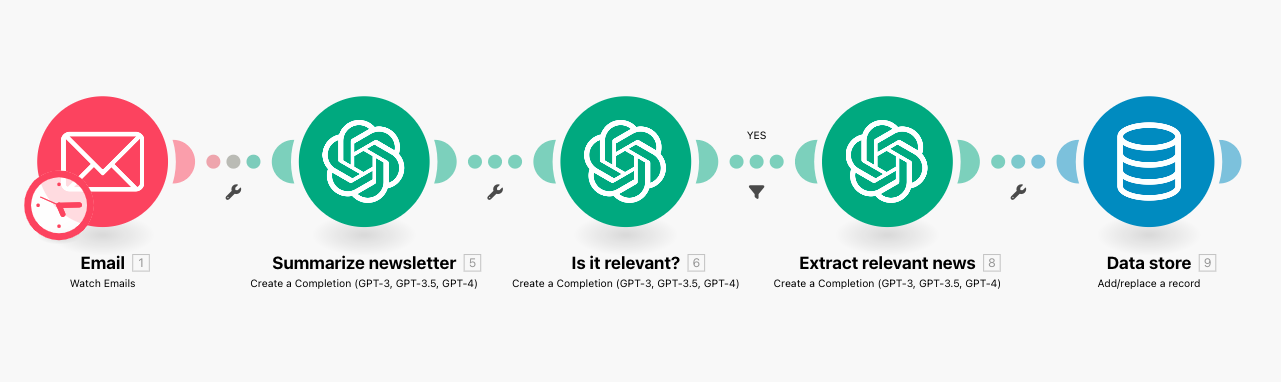

First, we have to set up a connection with the email provider and retrieve new emails.

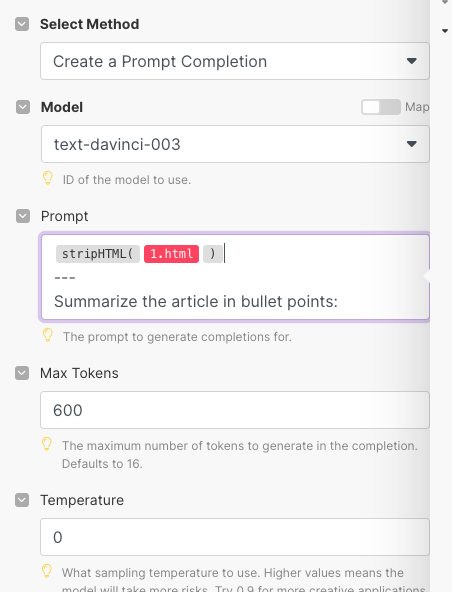

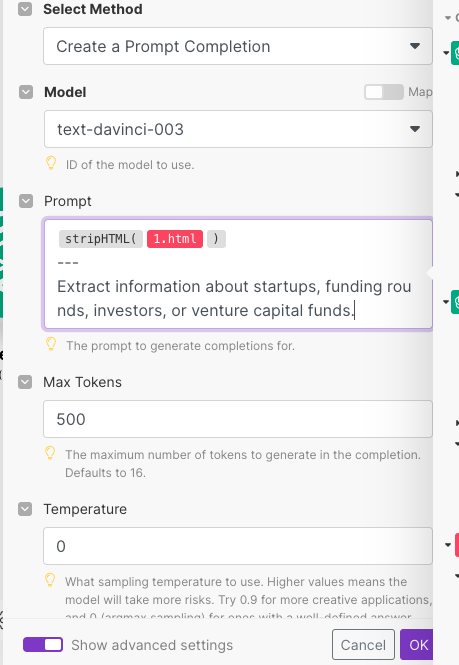

In the next step, we use OpenAI to summarize the content of the email. Before sending the text to OpenAI, we need to remove all HTML tags. We do it for two reasons. First, HTML is irrelevant to the task at hand because OpenAI cannot automatically download pictures and read data from them anyway. Second, we would exceed the token limit if we included everything.

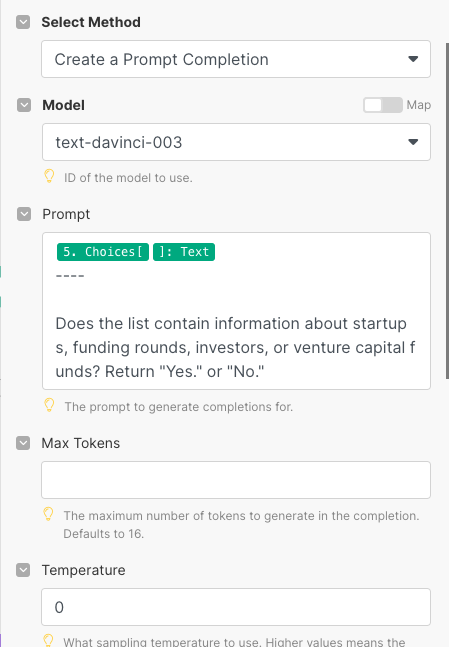
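In make.com, a built-in module handles the tag removal. If we coded the step in Python instead, a minimal sketch using only the standard library could look like the one below. The `strip_html` name and the decision to skip `<script>` and `<style>` content are assumptions made for this illustration, not part of the workflow shown above.

```python
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collects text nodes and ignores the content of <script> and <style>."""

    SKIPPED = {"script", "style"}

    def __init__(self):
        super().__init__(convert_charrefs=True)
        self._chunks = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep only text that is outside of skipped elements.
        if self._skip_depth == 0:
            self._chunks.append(data)

    def text(self):
        # Collapse the whitespace left behind by the removed markup.
        return " ".join(" ".join(self._chunks).split())


def strip_html(html: str) -> str:
    """Return the visible text of an HTML email body (hypothetical helper)."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return parser.text()
```

Dropping the markup this way also shrinks the input considerably, which helps with the token limit mentioned above.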

Now, we have a summary of the email. We will ask GPT-3 whether the summary contains anything of interest. Could we do it in a single prompt? Yes, but I have noticed I get better results if I split it into two steps.

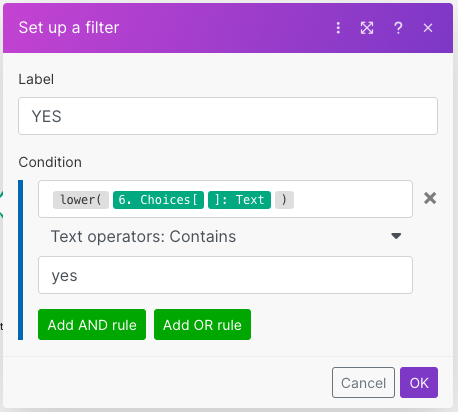

When we receive a response from the model, we use the result to decide whether the workflow should continue by checking whether the model’s response contains “yes.”
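In code, the same gate could be a one-line check. The sketch below is a slightly stricter variant of the "contains" filter: it matches "yes" as a whole word and ignores case, so a response mentioning "yesterday" does not pass by accident. The function name `is_relevant` is hypothetical.

```python
import re


def is_relevant(model_response: str) -> bool:
    """Return True when the model's answer contains the word "yes"."""
    # \b makes sure we match the whole word, not a substring of another word.
    return re.search(r"\byes\b", model_response, re.IGNORECASE) is not None
```

For example, `is_relevant("Yes.")` passes the gate, while `is_relevant("No")` stops the workflow.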

If the newsletter contains what we want, we should extract the relevant part from the entire email. We send the email to GPT-3 again. This time, we ask it to extract the relevant information. Why is it a separate step? If the email doesn’t contain anything relevant, the model sometimes tries to extract some information anyway, even if we tell it to return “None” in such cases. Switching to a three-step process (summarize, filter, extract) drastically increased the quality of the results.

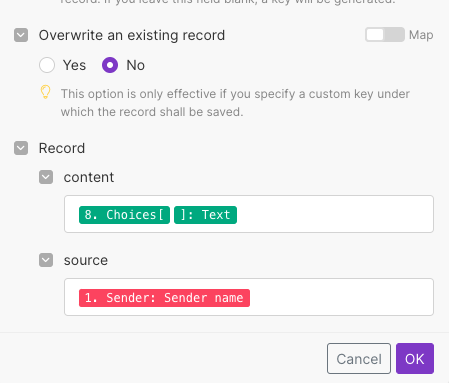
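The three steps can be sketched as a single Python function. This is only an illustration under assumptions: the prompts are made up for this example (the real ones live in the make.com modules), and `complete` stands for any function that sends a prompt to the model and returns its text response.

```python
import re
from typing import Callable, Optional

# Topics from this article's example; adjust to your own interests.
TOPICS = "startups, funding, and VCs"


def process_newsletter(
    email_text: str, complete: Callable[[str], str]
) -> Optional[str]:
    """Summarize, filter, and extract. Returns None for irrelevant emails."""
    # Step 1: summarize the plain-text email.
    summary = complete(f"Summarize the following newsletter:\n\n{email_text}")

    # Step 2: ask a yes/no question about the summary.
    verdict = complete(
        f"Does this summary mention {TOPICS}? Answer yes or no.\n\n{summary}"
    )
    if not re.search(r"\byes\b", verdict, re.IGNORECASE):
        return None  # Stop here, so the model never extracts from noise.

    # Step 3: extract the relevant parts from the full email.
    return complete(
        f"Extract the information about {TOPICS} from the following "
        f"newsletter as tweet-sized summaries:\n\n{email_text}"
    )
```

Because the extraction prompt runs only after the filter says "yes", the model is never asked to extract from an email that has nothing to offer.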

Finally, we store the result in a data store. We will need it later when we send the weekly summary.

Things to Consider While Using AI for Business Process Automation

We can allow AI models to be more random or make them deterministic using the temperature parameter. What is the right value? It depends on what we want to achieve. If we wanted the model to generate an email, we would prefer to make it less deterministic and more creative. Hence, we would set a higher temperature value. However, when we use the model to control automation or extract data, we want the same output every time we send the same input. Therefore, all of the AI interactions in this example have temperature set to 0.
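Outside make.com, the same setting is a single field in the request. The sketch below builds a request body shaped like an OpenAI Chat Completions call, which accepts a `temperature` field. The helper name `build_request` is hypothetical, and the model name is left to the caller.

```python
def build_request(prompt: str, model: str, temperature: float = 0.0) -> dict:
    """Build a request body with an explicit temperature (hypothetical helper)."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        # 0 asks for the most likely tokens; higher values add randomness.
        "temperature": temperature,
    }
```

Even at 0, identical output is not strictly guaranteed, so the checks that consume the response should tolerate small variations in wording.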

Sending the Weekly Summary

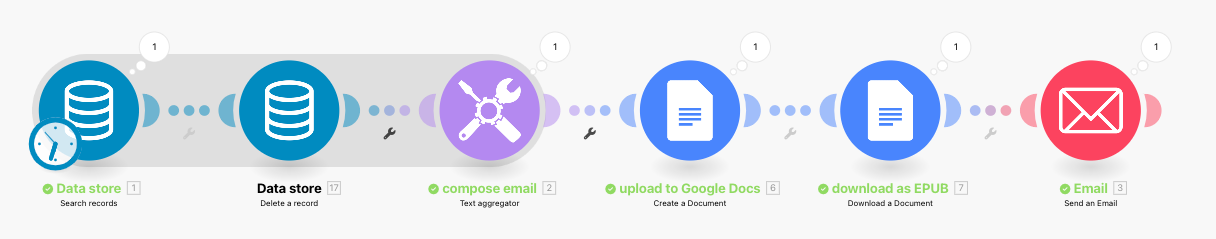

In a separate workflow, we have to retrieve the summaries from the data store, compose the email, and send it to Kindle. In the end, we remove all existing summaries to avoid sending the same information twice.

We could probably find an online service that generates EPUB from a given text and has a connector for make.com, but let’s keep it simple. We already have a connection to Gmail configured, so we can upload the summary email as a new document to Google Docs. After creating the document, we can download it as an EPUB file.

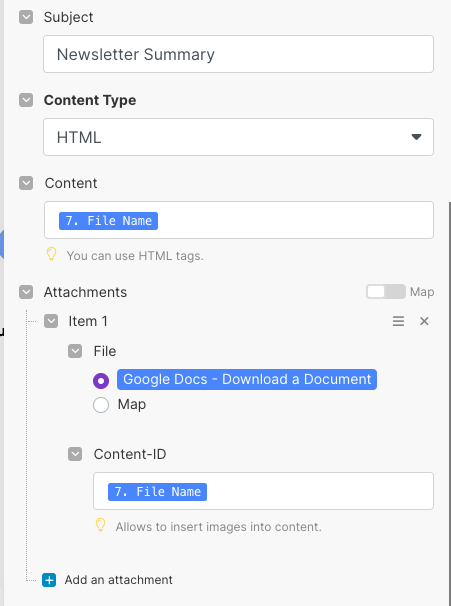

When we have the file, we can send it to Kindle. All we need to do is send the file as an attachment to a pre-configured address.

Amazon’s documentation says we can send an empty email, but it doesn’t work. If we send an email with an attachment and an empty body, Amazon replies with an error saying our email had no attachment. The email needs content, even if that content is just the HTML tags of an empty message.
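For reference, here is how such a message could be built with Python's standard `email` package. It is a sketch under assumptions: the subject line, the default file name, and the function name `build_kindle_email` are invented for this example, and the addresses are supplied by the caller.

```python
from email.message import EmailMessage


def build_kindle_email(
    sender: str,
    kindle_address: str,
    epub_bytes: bytes,
    filename: str = "weekly-summary.epub",
) -> EmailMessage:
    """Build an email with an EPUB attachment and a non-empty HTML body."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = kindle_address
    msg["Subject"] = "Weekly summary"
    # The body must not be empty; the tags of an empty HTML message are enough.
    msg.set_content("<html><body></body></html>", subtype="html")
    msg.add_attachment(
        epub_bytes,
        maintype="application",
        subtype="epub+zip",
        filename=filename,
    )
    return msg
```

The resulting message can be handed to `smtplib.SMTP.send_message` or to any other mail client that accepts an `EmailMessage`.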

In the end, we remove the summaries from the data store. The removal takes effect at the end, even though deletion is the second operation in the workflow, because the data store element in make.com supports transactions and doesn’t commit the deletion until all operations in the workflow have completed successfully.
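The same "delete early, commit late" behavior can be illustrated with SQLite. This is an analogy, not a description of how make.com implements its data store: the `summaries` table, its columns, and the `send` callback are assumptions made for this sketch.

```python
import sqlite3
from typing import Callable


def send_weekly_summary(
    conn: sqlite3.Connection, send: Callable[[str], None]
) -> int:
    """Read and delete all summaries, committing only if sending succeeds."""
    # `with conn` commits when the block finishes and rolls back on an exception.
    with conn:
        rows = conn.execute("SELECT body FROM summaries ORDER BY id").fetchall()
        # The deletion is issued early but stays uncommitted for now.
        conn.execute("DELETE FROM summaries")
        if rows:
            # If this raises, the deletion above is rolled back.
            send("\n\n".join(body for (body,) in rows))
    return len(rows)
```

If sending fails, the summaries stay in the table and go out with the next run instead of being lost.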
